Boost Your Business with Advanced MediaPipe Solutions

Leverage Google's MediaPipe, an open-source framework, to build portable, real-time multimodal ML pipelines that are quick to integrate, customize, and deploy across platforms. As of 2025, it offers upgraded solutions such as Face Landmarker and Hand Landmarker, improving efficiency in perception-heavy fields such as AR, health monitoring, and interactive AI.

What is MediaPipe?

MediaPipe is Google's open-source project that provides cross-platform APIs, libraries, and tools for adding AI and ML features to applications. It supports real-time ML pipelines for perception tasks across vision, text, and audio, offers pre-trained models, enables customization through MediaPipe Model Maker, and supports evaluation and benchmarking in MediaPipe Studio. As of its September 2025 update, it includes upgraded versions of the legacy solutions and integrates with Google AI Edge Portal for benchmarking Edge AI models at scale.

Why Choose Our MediaPipe Services?

MediaPipe Tasks Integration

Cross-platform APIs and libraries for deploying ready-to-run solutions on Android, Web, Python, and iOS with TensorFlow Lite and OpenCV.

Pre-Trained Models & Customization

Access Google's pre-trained models and use Model Maker to fine-tune with user data for domain-specific perception tasks.
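Model Maker's image classifier can load training data from a directory that contains one subfolder per label (for example via `image_classifier.Dataset.from_folder`). Before fine-tuning, it helps to sanity-check that layout. The sketch below is plain Python and does not call Model Maker; the function name `summarize_dataset`, the accepted extensions, and the `min_per_label` threshold are hypothetical choices for illustration.

```python
from pathlib import Path

# Illustrative assumption: file extensions counted as training images.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def summarize_dataset(root: str, min_per_label: int = 20) -> dict:
    """Count images per label folder and flag labels with too few samples.

    Assumes a one-subfolder-per-label layout, e.g.
    root/helmet/001.jpg, root/no_helmet/002.jpg.
    """
    counts = {}
    for label_dir in sorted(Path(root).iterdir()):
        if not label_dir.is_dir():
            continue  # ignore stray files at the top level
        counts[label_dir.name] = sum(
            1
            for f in label_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
        )
    # Labels with very few examples tend to fine-tune poorly.
    too_small = [label for label, n in counts.items() if n < min_per_label]
    return {"counts": counts, "too_small": too_small}
```

Running `summarize_dataset("data/ppe")` before training surfaces empty or underpopulated label folders early, when they are cheap to fix.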

Multimodal ML Pipelines

Build efficient real-time pipelines that combine vision, text, and audio tasks, such as object detection and text classification.
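Combining modalities can be as simple as late fusion: each task (say, an image classifier and an audio classifier) scores a shared set of categories, and the scores are merged with per-modality weights. This is a generic technique shown for illustration, not a MediaPipe API; the category names and weights in the sketch are hypothetical.

```python
def late_fusion(scores_by_modality: dict, weights: dict):
    """Weighted average of per-category scores across modalities.

    scores_by_modality: {"vision": {"dog": 0.7, ...}, "audio": {...}}
    weights: {"vision": 0.6, "audio": 0.4}; modalities without a
    positive weight are ignored.
    Returns (best_category, fused_scores).
    """
    fused = {}
    total_weight = 0.0
    for modality, scores in scores_by_modality.items():
        w = weights.get(modality, 0.0)
        if w <= 0:
            continue
        total_weight += w
        # A category this modality did not score contributes 0 for it.
        for category, score in scores.items():
            fused[category] = fused.get(category, 0.0) + w * score
    if total_weight == 0:
        raise ValueError("no modality has a positive weight")
    # Normalize so fused scores stay on the same 0..1 scale as the inputs.
    fused = {c: s / total_weight for c, s in fused.items()}
    best = max(fused, key=fused.get)
    return best, fused
```

Late fusion keeps each task independent, so any single modality can be swapped or retrained without touching the others; the trade-off is that it cannot learn cross-modal interactions.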

Scalable Deployment & Benchmarking

Deploy across devices, using MediaPipe Studio for visualization and evaluation and Google AI Edge Portal (available as of 2025) for large-scale benchmarking.
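At its core, on-device benchmarking means measuring per-inference latency on realistic inputs. The framework-agnostic sketch below assumes `infer` is any callable wrapping a model (for example, a MediaPipe Tasks detector's `detect` call); the warm-up count and the reported percentiles are illustrative choices, and it does not reproduce what Google AI Edge Portal measures.

```python
import math
import statistics
import time
from typing import Any, Callable, Sequence


def benchmark(infer: Callable[[Any], Any], inputs: Sequence[Any], warmup: int = 5) -> dict:
    """Time infer(x) for every input and summarize latency in milliseconds."""
    if len(inputs) == 0:
        raise ValueError("need at least one input")
    # Untimed warm-up runs let caches, delegates, and lazy init settle.
    for x in inputs[:warmup]:
        infer(x)
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        infer(x)
        latencies.append((time.perf_counter() - start) * 1000.0)
    latencies.sort()
    mean_ms = statistics.fmean(latencies)
    # Nearest-rank 95th percentile of the sorted latencies.
    p95_ms = latencies[max(0, math.ceil(0.95 * len(latencies)) - 1)]
    return {
        "mean_ms": mean_ms,
        "p50_ms": statistics.median(latencies),
        "p95_ms": p95_ms,
        # Sequential throughput: one inference at a time.
        "throughput_fps": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }
```

Reporting p95 alongside the mean matters for real-time apps: an occasional slow frame is what users perceive as stutter, even when the average looks fine.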

How MediaPipe Development Works

Build portable, real-time ML solutions through Google's streamlined framework.

1. Assess: Identify use cases such as face detection or audio classification and match them to MediaPipe's pre-built solutions.

2. Design: Architect pipelines using MediaPipe Tasks, graphs, and multimodal fusion for cross-platform compatibility.

3. Develop: Implement with the MediaPipe APIs, customizing models with Model Maker on your own datasets for vision or audio tasks.

4. Test: Validate accuracy and real-time performance in MediaPipe Studio and on each target platform.

5. Deploy & Optimize: Launch on target platforms, benchmark with Google AI Edge Portal, and refine models for Edge AI scalability.
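For the Test step, one practical check is to run the same labeled test set through each platform build and compare both accuracy and how often the platforms agree with each other. The sketch assumes predicted labels have already been collected per platform as lists aligned with the ground truth; the platform names used with it are hypothetical.

```python
def compare_platforms(truth: list, predictions_by_platform: dict) -> dict:
    """Report accuracy per platform and pairwise agreement rates."""
    n = len(truth)
    if n == 0:
        raise ValueError("empty test set")
    report = {"accuracy": {}, "agreement": {}}
    for platform, preds in predictions_by_platform.items():
        if len(preds) != n:
            raise ValueError(f"{platform}: expected {n} predictions, got {len(preds)}")
        correct = sum(p == t for p, t in zip(preds, truth))
        report["accuracy"][platform] = correct / n
    # Agreement flags platform-specific drift even where both are wrong.
    names = sorted(predictions_by_platform)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            same = sum(
                x == y
                for x, y in zip(predictions_by_platform[a], predictions_by_platform[b])
            )
            report["agreement"][(a, b)] = same / n
    return report
```

Low agreement between two builds of the same model usually points to preprocessing or delegate differences rather than the model itself.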

Key Features & Capabilities

Cross-Platform APIs

MediaPipe Tasks for Android, Web, Python, and iOS deployment with unified interfaces.

Pre-Trained Models

Ready-to-run models for vision (e.g., object detection), text, and audio classification tasks.

Model Customization

MediaPipe Model Maker for fine-tuning with user data to enhance accuracy in specific domains.

Real-Time Multimodal Pipelines

Optimized graphs for fusing vision, text, and audio in real-time perception applications.
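In MediaPipe's framework layer, a graph is a set of calculators (C++ nodes) connected by streams that carry timestamped packets, configured as a `CalculatorGraphConfig` protobuf. The toy Python model below only illustrates that dataflow idea for a linear chain; it does not use MediaPipe's API, and the `Packet` and `run_chain` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List, Optional


@dataclass(frozen=True)
class Packet:
    timestamp: int  # e.g., microseconds since stream start
    payload: Any


# A toy "calculator": maps a payload to a new payload, or returns None
# to drop the packet (similar in spirit to a gate in a real graph).
Calculator = Callable[[Any], Optional[Any]]


def run_chain(packets: Iterable[Packet], calculators: List[Calculator]) -> List[Packet]:
    outputs = []
    last_ts = None
    for packet in packets:
        # MediaPipe streams require monotonically increasing timestamps.
        if last_ts is not None and packet.timestamp <= last_ts:
            raise ValueError(f"timestamp {packet.timestamp} is not increasing")
        last_ts = packet.timestamp
        payload = packet.payload
        for calc in calculators:
            payload = calc(payload)
            if payload is None:
                break  # dropped; downstream calculators never see it
        if payload is not None:
            # Outputs keep the input timestamp, so results stay aligned.
            outputs.append(Packet(packet.timestamp, payload))
    return outputs
```

Carrying the input timestamp through every stage is what lets real graphs align outputs from parallel branches (for example, video and audio) before fusing them.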

MediaPipe Studio

Browser-based tool for visualizing, evaluating, and benchmarking ML solutions in real time.

2025 Edge AI Enhancements

Integration with Google AI Edge Portal for scalable benchmarking and upgraded legacy solutions like Hand Landmarker.

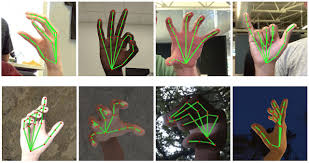

MediaPipe in Action

Experience Google's real-time ML capabilities with our advanced MediaPipe implementations.

Solutions & Use Cases

Google's MediaPipe powers cross-platform AI solutions, from vision tasks to audio classification, enabling real-time deployment and customization for 2025's Edge AI demands.

Augmented Reality

Face Landmarker and Hand Landmarker for immersive AR experiences and virtual interactions.
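Per-frame landmarks can jitter slightly, which appears as shaking in AR overlays. A common mitigation (a generic technique, not something attributed here to MediaPipe) is temporal smoothing. The exponential-moving-average sketch assumes each frame's landmarks have been converted to a fixed-order list of `(x, y, z)` tuples; the `alpha` default is an illustrative choice.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


class LandmarkSmoother:
    """Exponential moving average over a fixed-size list of landmarks."""

    def __init__(self, alpha: float = 0.5):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # higher = more responsive, lower = smoother
        self._state: Optional[List[Point]] = None

    def update(self, landmarks: Sequence[Point]) -> List[Point]:
        if self._state is None or len(self._state) != len(landmarks):
            # First frame (or landmark count changed): start from raw values.
            self._state = [tuple(p) for p in landmarks]
        else:
            a = self.alpha
            self._state = [
                tuple(a * new + (1 - a) * old for new, old in zip(p, prev))
                for p, prev in zip(landmarks, self._state)
            ]
        return list(self._state)

    def reset(self) -> None:
        # Call when tracking is lost so stale positions are not blended in.
        self._state = None
```

A single `alpha` trades smoothness against lag; fast hand motion may call for a higher value than a mostly static face.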

Fitness & Health

Pose Landmarker for real-time body tracking in workout apps and remote movement monitoring in telemedicine.
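Pose Landmarker returns 33 body landmarks per detected person; in its landmark map, indices 11, 13, and 15 are the left shoulder, elbow, and wrist. A typical workout feature is a joint angle plus a rep counter with hysteresis. The sketch uses plain 2D points; the `CurlCounter` helper and its angle thresholds are hypothetical, not MediaPipe defaults.

```python
import math
from typing import Tuple

Point2D = Tuple[float, float]

# Pose Landmarker indices for the left arm.
LEFT_SHOULDER, LEFT_ELBOW, LEFT_WRIST = 11, 13, 15


def joint_angle(a: Point2D, b: Point2D, c: Point2D) -> float:
    """Angle at vertex b, in degrees (0..180), formed by points a-b-c."""
    ang = abs(math.degrees(
        math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    ))
    return 360.0 - ang if ang > 180.0 else ang


class CurlCounter:
    """Counts one rep per extended -> flexed transition of the elbow."""

    def __init__(self, extended: float = 160.0, flexed: float = 40.0):
        self.extended = extended  # illustrative threshold, degrees
        self.flexed = flexed      # illustrative threshold, degrees
        self.stage = None         # None until the arm is first seen extended
        self.reps = 0

    def update(self, elbow_angle: float) -> int:
        if elbow_angle > self.extended:
            self.stage = "down"
        elif elbow_angle < self.flexed and self.stage == "down":
            self.stage = "up"
            self.reps += 1
        return self.reps
```

With a Pose Landmarker result, the landmarks for the first person are typically read from `result.pose_landmarks[0]`. Scale each landmark's normalized `x` and `y` by the image width and height before calling `joint_angle`; otherwise angles are distorted on non-square frames. The gap between the two thresholds keeps noisy angles near a single cutoff from double-counting reps.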

Gesture Interfaces

Hand gesture recognition for gaming controls, accessibility tools, and smart device interactions.
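MediaPipe's Gesture Recognizer covers a set of common gestures out of the box, and Hand Landmarker's 21 landmarks also allow simple rule-based gestures. In that model, index 0 is the wrist, and each finger's PIP joint and tip are 6/8 (index), 10/12 (middle), 14/16 (ring), and 18/20 (pinky). The heuristic below, which treats a finger as extended when its tip is farther from the wrist than its PIP joint, is an illustrative assumption, not MediaPipe's classifier.

```python
import math
from typing import List, Sequence, Tuple

Point2D = Tuple[float, float]

WRIST = 0
# (PIP joint, fingertip) index pairs in the 21-point hand landmark model.
FINGERS = {
    "index": (6, 8),
    "middle": (10, 12),
    "ring": (14, 16),
    "pinky": (18, 20),
}


def extended_fingers(landmarks: Sequence[Point2D]) -> List[str]:
    """Names of fingers whose tip is farther from the wrist than their PIP joint.

    Heuristic only; the thumb is skipped because it folds sideways.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    wrist = landmarks[WRIST]
    return [
        name
        for name, (pip, tip) in FINGERS.items()
        if math.dist(landmarks[tip], wrist) > math.dist(landmarks[pip], wrist)
    ]
```

Because it compares distances from the wrist rather than raw y-coordinates, the rule still works when the hand is rotated in the image plane.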

Object Detection

Real-time object tracking and classification for security, retail, and autonomous systems.
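An object detector returns boxes independently for each frame, so keeping a stable identity across frames takes an extra association step. The greedy IoU-matching sketch assumes boxes as `(x_min, y_min, x_max, y_max)` tuples (MediaPipe's bounding boxes use an origin plus width and height, so convert first); the `IouTracker` class and its 0.3 threshold are hypothetical. Production trackers usually add motion prediction.

```python
from itertools import count
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    def __init__(self, threshold: float = 0.3):
        self.threshold = threshold
        self.tracks: Dict[int, Box] = {}
        self._ids = count(1)

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        # Score every (track, detection) pair, best overlaps first.
        pairs = sorted(
            ((iou(box, det), tid, j)
             for tid, box in self.tracks.items()
             for j, det in enumerate(detections)),
            reverse=True,
        )
        matched_tracks, matched_dets, new_tracks = set(), set(), {}
        for score, tid, j in pairs:
            if score < self.threshold:
                break  # remaining pairs overlap even less
            if tid in matched_tracks or j in matched_dets:
                continue
            matched_tracks.add(tid)
            matched_dets.add(j)
            new_tracks[tid] = detections[j]
        # Unmatched detections start new tracks; unmatched tracks are dropped.
        for j, det in enumerate(detections):
            if j not in matched_dets:
                new_tracks[next(self._ids)] = det
        self.tracks = new_tracks
        return dict(new_tracks)
```

Dropping unmatched tracks immediately keeps the sketch short; a production tracker would usually keep a track alive for a few missed frames to survive brief occlusions.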